Neural Architecture Search (NAS) is an open and challenging problem in machine learning. While NAS offers great promise, the prohibitive computational cost of most existing NAS methods makes it difficult to search architectures directly on large-scale tasks. To address this, we make two contributions: the first analyzes the potential of transferring architectures searched on small datasets to large ones; the second uses polyharmonic splines to conduct the search directly at large scale. Our searched model achieves new state-of-the-art performance on ImageNet22K.

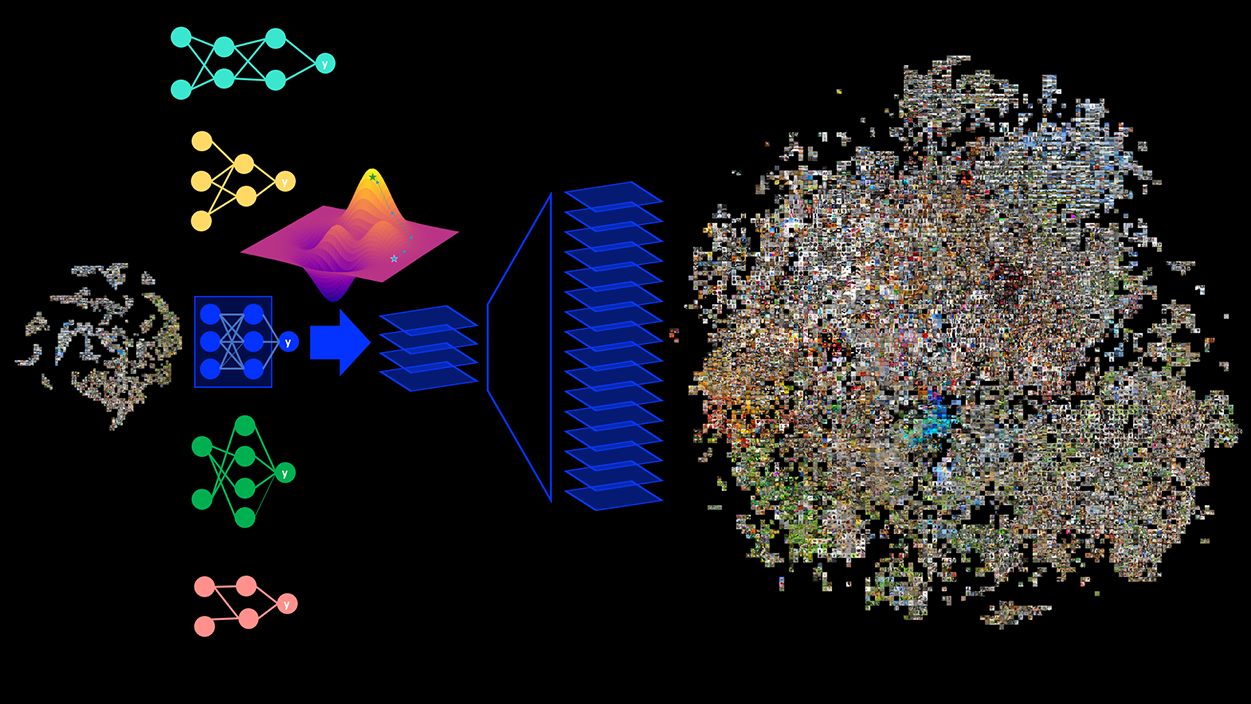

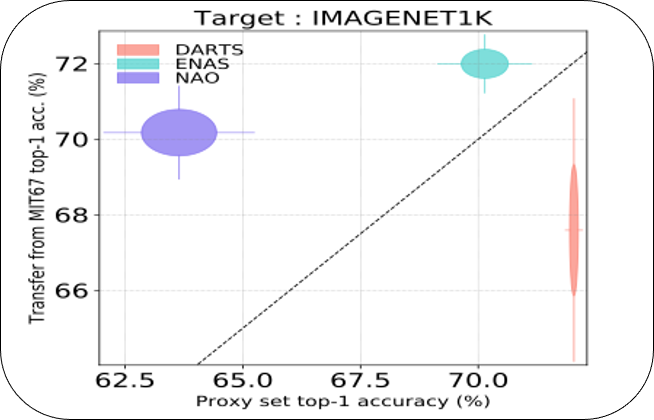

NASTransfer. The typical way of conducting large-scale NAS is to search for an architectural building block on a small dataset (either a proxy set drawn from the large dataset or a completely different small-scale dataset) and then transfer the block to the larger dataset. We conduct a comprehensive evaluation of architecture transferability across different NAS methods, source datasets, and training protocols, with a series of experiments on large-scale benchmarks such as ImageNet1K and ImageNet22K. We find that: (i) On average, architectures searched on completely different small datasets (e.g., CIFAR10) transfer about as well as architectures searched directly on proxy sets of the target dataset. However, the design of the proxy set has a considerable impact on the ranking of different NAS methods. (ii) While different NAS methods show similar performance on a source dataset (e.g., CIFAR10), they differ significantly in transfer performance on a large dataset (e.g., ImageNet1K). (iii) Even on large datasets, the random sampling baseline is very competitive, but choosing an appropriate combination of proxy set and search strategy can provide a significant improvement over it.
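One simple way to quantify finding (ii) is to compare how NAS methods rank on the source dataset against how they rank on the target dataset. The sketch below computes a Kendall tau rank correlation for that purpose. It is only an illustration: the method names and accuracy values are invented placeholders, not results from the paper, and the text above does not state which transferability measure the study itself uses.

```python
from itertools import combinations


def kendall_tau(xs, ys):
    """Kendall tau-a rank correlation between two equally long score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two score lists of equal length >= 2")
    concordant = discordant = 0
    for i, j in combinations(range(len(xs)), 2):
        sign = (xs[i] - xs[j]) * (ys[i] - ys[j])
        if sign > 0:
            concordant += 1      # pair ordered the same way on both datasets
        elif sign < 0:
            discordant += 1      # pair ordered in opposite ways
    n_pairs = len(xs) * (len(xs) - 1) // 2
    return (concordant - discordant) / n_pairs


# Placeholder accuracies, invented for illustration only (NOT from the paper).
source_acc = {"method_a": 97.1, "method_b": 97.0, "method_c": 97.2, "method_d": 96.9}
target_acc = {"method_a": 74.0, "method_b": 75.5, "method_c": 73.2, "method_d": 74.8}

names = sorted(source_acc)
tau = kendall_tau([source_acc[n] for n in names], [target_acc[n] for n in names])
print(f"Kendall tau between source and target rankings: {tau:.2f}")
```

A value near 1 would mean the source ranking is preserved after transfer; a value near 0 or below would mean that small differences on the source dataset say little about which method transfers best.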

NAS with Polyharmonic Splines. We propose a NAS method based on polyharmonic splines that can perform the search directly on large-scale, imbalanced target datasets. We demonstrate the effectiveness of our method on the ImageNet22K benchmark, which contains 14 million images distributed in a highly imbalanced manner over 21,841 categories. By exploring the search space of the ResNet and Big-Little Net ResNeXt architectures directly on ImageNet22K, our polyharmonic spline NAS method designed a model that achieved a top-1 accuracy of 40.03% on ImageNet22K, an absolute improvement of 3.13% over the state of the art with a similar global batch size.
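To make the idea of a polyharmonic spline concrete, the following minimal sketch fits a cubic polyharmonic spline (radial basis r^3 plus a linear polynomial term) to a handful of evaluated configurations and uses it as a surrogate to pick the most promising candidate from a grid. Everything specific here is an assumption for illustration: the two normalized inputs (labeled depth and width), the scores, the kernel order `k=3`, and the grid search step. The text above does not describe how the paper's method encodes architectures or selects candidates.

```python
import math


def _phi(r, k=3):
    """Polyharmonic basis: r^k for odd k, r^k * ln(r) for even k."""
    if r == 0.0:
        return 0.0
    return r ** k if k % 2 else r ** k * math.log(r)


def _solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: sample points may be degenerate")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_polyharmonic(points, values, k=3):
    """Return a function interpolating `values` at `points` (distinct, not coplanar)."""
    n, d = len(points), len(points[0])
    size = n + d + 1
    a = [[0.0] * size for _ in range(size)]
    for i, p in enumerate(points):
        for j, q in enumerate(points):
            a[i][j] = _phi(math.dist(p, q), k)
        for c, t in enumerate([1.0, *p]):   # linear polynomial block and its transpose
            a[i][n + c] = t
            a[n + c][i] = t
    coeffs = _solve(a, list(values) + [0.0] * (d + 1))
    weights, poly = coeffs[:n], coeffs[n:]

    def predict(x):
        rbf = sum(w * _phi(math.dist(x, p), k) for w, p in zip(weights, points))
        return rbf + poly[0] + sum(c * t for c, t in zip(poly[1:], x))

    return predict


# Hypothetical (depth, width) settings scaled to [0, 1], with invented scores.
configs = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (0.5, 0.5)]
scores = [0.30, 0.34, 0.33, 0.36, 0.37]

surrogate = fit_polyharmonic(configs, scores)
candidates = [(i / 4, j / 4) for i in range(5) for j in range(5)]
best = max(candidates, key=surrogate)
print("most promising candidate under the surrogate:", best)
```

The appeal of this kind of surrogate is that each expensive training run on the large dataset contributes one sample, and the spline then predicts scores for untried configurations at negligible cost. A real search would retrain at the suggested point, add the measured score to the samples, and refit.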

Rameswar Panda, Michele Merler, Mayoore Jaiswal, Hui Wu, Kandan Ramakrishnan, Ulrich Finkler, Chun-Fu Chen, Minsik Cho, David Kung, Rogerio S. Feris, Bishwaranjan Bhattacharjee. NASTransfer: Analyzing Architecture Transferability in Large Scale Neural Architecture Search. 35th AAAI Conference on Artificial Intelligence (AAAI), 2021.